Michele Fusaroli

@FusaroliMichele

Pharmacovigilance Scientist, Uppsala Monitoring Centre

Disproportionality analysis is pharmacovigilance’s signal-detection workhorse. But more reports do not mean better evidence, and crude results can mislead.

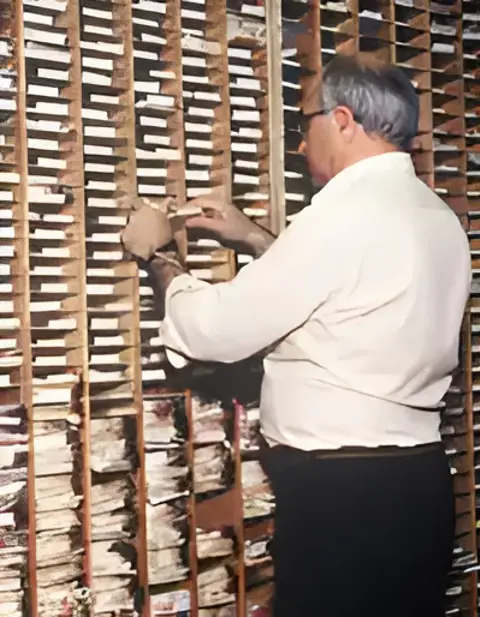

Pharmacovigilance didn’t start with big data or fancy algorithms. In its early days, safety signals emerged from the careful clinical assessment of handfuls of index cases, in the search for a detail or pattern that could point to a yet unknown adverse drug reaction. As the volume of reports surged, relying solely on expert judgement became unfeasible and ineffective. The field needed quantitative tools that could help sift through thousands – eventually millions – of reports to spot early signs of harm.

That is where disproportionality analysis stepped in. At its core, disproportionality analysis asks whether a particular drug–event combination appears more often than expected, given the overall reporting patterns in the database. Over the past decades, it has become the workhorse of signal detection, at least in organisations with a large influx of reports. It is now widely used, well-established, and deeply embedded in regulatory practice.
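As a minimal sketch of the "more often than expected" question, the Python below computes two common disproportionality measures from a 2×2 table of report counts: the observed-to-expected ratio and the reporting odds ratio (ROR) with an approximate 95% confidence interval. The function name and all counts are hypothetical, chosen only for illustration; they do not come from any real database.

```python
import math

def disproportionality(a, b, c, d):
    """Crude disproportionality measures from a 2x2 table of report counts.

    a: reports with the drug and the event
    b: reports with the drug, other events
    c: reports with other drugs and the event
    d: reports with other drugs, other events
    """
    n = a + b + c + d
    # Expected count if drug and event were reported independently
    expected = (a + b) * (a + c) / n
    # Reporting odds ratio and its standard error on the log scale
    ror = (a * d) / (b * c)
    se_log_ror = math.sqrt(1 / a + 1 / b + 1 / c + 1 / d)
    lower = math.exp(math.log(ror) - 1.96 * se_log_ror)
    upper = math.exp(math.log(ror) + 1.96 * se_log_ror)
    return {
        "expected": expected,
        "obs_over_exp": a / expected,
        "ror": ror,
        "ci95": (lower, upper),
    }

# Hypothetical counts, for illustration only
result = disproportionality(a=20, b=980, c=400, d=98600)
print(result)
```

A ratio above 1 only says that the combination is reported more often than the rest of the database would predict. By itself it says nothing about causation.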

Traditionally, its purpose was straightforward: to help generate new hypotheses of adverse drug reactions – an early, data-driven flagging of combinations worth investigating further.

Today, however, a growing number of published disproportionality studies use these methods for more than generating new hypotheses, attempting to bring further evidence in support of or against existing signals, or to characterise or refine already established adverse drug reactions.

Statistical signals obtained with crude disproportionality analysis are preliminary hypotheses, not evidence for clinical or public health action. Disproportionality analyses of adverse event reports can be distorted by many biases, including confounding, colliders, measurement errors, and reporting biases, making results difficult to interpret and easy to misuse. Before a statistical signal can inform decisions, it must undergo the full signal management process, including validation (ensuring sufficient evidential support, case-level review, checking whether a signal is novel or if it refers to an already established reaction, and excluding major biases), prioritisation, and synthesising all available evidence.

However, hundreds of statistical signals are generated annually and are often published in the literature without appropriate clinical review and assessment, under the assumption that more data automatically means better evidence. In pharmacovigilance, this assumption can be dangerously wrong: publishing unvetted signals diverts limited time and expertise away from the follow-up needed to ensure medicines safety.

The term ‘Pharmacovigilance Syndrome’ was coined by Greenblatt in 2015 to describe the practice of irresponsibly publishing any disproportionality analysis, expecting readers to correctly interpret it and some undefined ‘other’ to bring the hypothesis forward in the signal management process. While institutions and regulatory agencies exist that manage signals, too many signals can overflow and clog the system, consuming pharmacovigilance resources and diverting attention from signals with stronger evidential support. Despite this, statistical signals of disproportionate reporting are increasingly published without adequate follow-up. Many of these publications remain unprocessed and can generate unjustified alarms, raising concerns about scientific quality and public health impact.

Faced with this clearly concerning situation, the pharmacovigilance community must ask what the right path forward is to ultimately ensure the safer use of medicines for everyone, everywhere. The answer is far from unanimous. One possible solution is to drop disproportionality completely from publications: a dead tree that should be cut down before it poisons the forest around it. Another possibility is to use disproportionality with greater awareness, pruning dead branches and taking care of the ones that bear fruit. More plausibly, it may be necessary both to stop publishing papers resting solely on disproportionality and to promote studies that integrate better-qualified disproportionality analyses backed by full clinical assessment. Such an approach would allow retention of the precious fruits that only adverse event reports can offer, but require more rigorous engagement with the disproportionality method’s many limitations.

For all its usefulness, disproportionality analysis comes with a baggage cart full of problems that too often gets pushed quietly into the ‘Limitations’ section – acknowledged, yes, but rarely addressed. That is part of the issue: the pharmacovigilance field has grown comfortable pointing at the cracks instead of fixing them.

One core challenge is how malleable disproportionality analyses are in the absence of a framework for choosing one analytical set-up over another. Change the background database, adjust the time window, switch the reference group, nudge a threshold, include a MedDRA term in the case definition, and a disproportionality may suddenly appear or vanish.

This creates a perfect playground for unintentional or intentional p-hacking: tuning parameters until results look publishable. Because reporting of these decisions is often incomplete, readers are left guessing which analytical ‘knobs’ were turned and what the results truly mean.
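The fragility described above can be illustrated with a small sketch. The Python below keeps the reports for the drug of interest fixed and changes only the reference group, then applies one commonly used flagging rule (lower bound of the 95% confidence interval of the ROR above 1). The counts, the set-up labels, and the choice of threshold are all assumptions made for illustration, not values from a real analysis.

```python
import math

def ror_with_ci(a, b, c, d, z=1.96):
    """Reporting odds ratio with an approximate confidence interval."""
    ror = (a * d) / (b * c)
    se_log_ror = math.sqrt(1 / a + 1 / b + 1 / c + 1 / d)
    lower = math.exp(math.log(ror) - z * se_log_ror)
    upper = math.exp(math.log(ror) + z * se_log_ror)
    return ror, lower, upper

# Same drug reports (a=20, b=980); only the reference group (c, d) changes.
# All counts are hypothetical.
setups = {
    "all other drugs in database": (20, 980, 400, 98600),
    "same-class comparator drugs only": (20, 980, 60, 3940),
}

flags = {}
for name, counts in setups.items():
    ror, lower, upper = ror_with_ci(*counts)
    # Assumed flagging rule: lower 95% bound above 1
    flags[name] = lower > 1.0
    print(f"{name}: ROR={ror:.2f} "
          f"(95% CI {lower:.2f}-{upper:.2f}), flagged={flags[name]}")
```

With these invented counts, the combination is flagged against the whole database but not against the narrower comparator. Reporting every set-up that was explored, rather than only the one that produced a flag, is one way to make the analytical 'knobs' visible to readers.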

Beyond this methodological fragility lies a deeper structural problem. Disproportionality relies entirely on adverse event reports, a data source that, while invaluable, has important limitations that must be considered to avoid spectacular, and potentially harmful, failures.

Because of these issues, disproportionality measures are not enough to establish the existence of a causal relationship between a drug and an adverse event. They can be suggestive, sometimes intriguingly so, but they are not stable proxies for causality. Yet in many publications, these limitations are treated as disclaimers rather than analytical challenges: statements added out of moral duty rather than methodological rigour. The implicit hope seems to be that “Yes, this might be biased, but surely someone else will sort it out later.”

That hope fuels the proliferation of low-quality signals. If disproportionality is to remain part of the scientific toolbox, rather than a relic to be retired, its weaknesses must be addressed directly rather than being treated as unavoidable footnotes. Just as epidemiology evolved beyond crude associations, pharmacovigilance must move past crude disproportionality.

The task ahead is not to abandon the method, nor to defend it blindly, but to take it seriously: to understand when it is informative, when it is misleading, and how to meaningfully account for the biases inherent in adverse event report databases. Anything less reduces disproportionality to a ritual statistical exercise rather than a scientific tool, helping no one, least of all the patients it ultimately seeks to protect.

Read more

DJ Greenblatt, “The Pharmacovigilance Syndrome”, Journal of Clinical Psychopharmacology, 2015.
